Eigenvalues are probably one of the most important quantities which can be extracted from matrices. Together with their corresponding eigenvectors, they form the basis for many applications. Calculating the eigenvalues of a given matrix is straightforward and implementations exist in many libraries. But sometimes the concrete matrix is not known in advance, e.g. when the matrix values are based on some bounded input data. In this case, it may be good to give at least an estimate of the range in which the eigenvalues can lie. As the name of this article suggests, there is a theorem intended for this use case, which will be discussed here.

What it does

For a square \( n \times n\) matrix \(A\), the Gershgorin circle theorem returns a range in which the eigenvalues must lie by simply using the information from the rows of \(A\). Before looking into the theorem though, let me remind the reader that eigenvalues may be complex-valued (even for a matrix which contains only real numbers). Therefore, the estimate lives in the complex plane, meaning we can visualize it in a 2D coordinate system with the real part as the \(x\)-axis and the imaginary part as the \(y\)-axis. Note also that \(A\) has a maximum of \(n\) distinct eigenvalues.

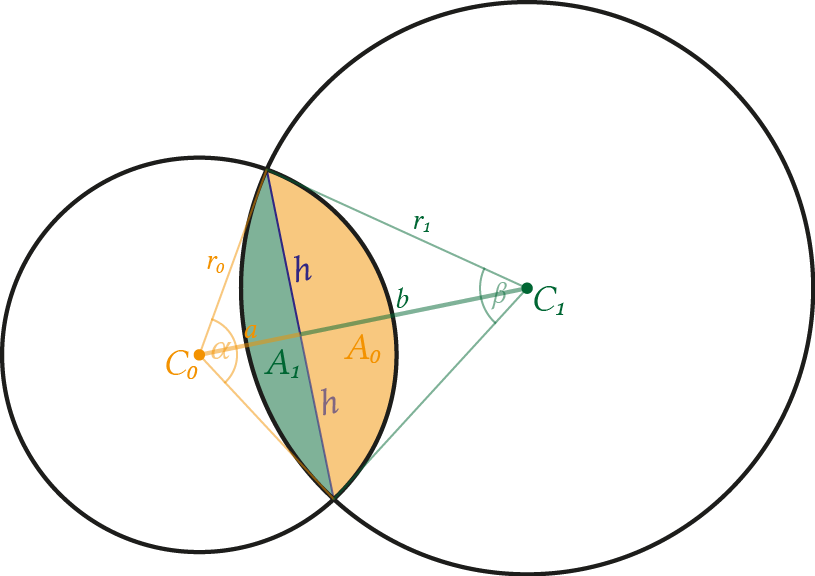

For the theorem, the concept of a Gershgorin disc is relevant. Such a disc exists for each row of \(A\). It is centred around the diagonal element \(a_{ii}\) (which may be complex as well), and its radius \(r_i\) is the sum of the absolute values of the other elements in the row. The disc is therefore defined as

\begin{equation} \label{eq:GershgorinCircle_Disc} C_i = \left\{c \in \mathbb{C} : \left| c - a_{ii} \right| \leq r_i\right\} \end{equation}with the corresponding row sum

\begin{equation} \label{eq:GershgorinCircle_Disc_RowSum} r_i = \sum_{\substack{j=1 \\ j\neq i}}^n \left|a_{ij}\right| \end{equation}(sum of the absolute values of all entries in the row except the diagonal element itself). As an example, let's take the following definition for

\begin{equation*} A = \begin{pmatrix} 4 & 3 & 15 \\ 1 & 1+i & 5 \\ -8 & -2 & 22 \end{pmatrix}. \end{equation*}There are three Gershgorin discs in this matrix:

- \(C_1\) with the centre point \(a_{11} = 4\) and radius \(r_1 = \left|3\right| + \left|15\right| = 18\)

- \(C_2\) with the centre point \(a_{22} = 1+i\) and radius \(r_2 = \left|1\right| + \left|5\right| = 6\)

- \(C_3\) with the centre point \(a_{33} = 22\) and radius \(r_3 = \left|-8\right| + \left|-2\right| = 10\)
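The three discs listed above can be reproduced with a few lines of NumPy. This is only a minimal sketch: the helper name `gershgorin_discs` is my own choice and not part of any library.

```python
import numpy as np


def gershgorin_discs(matrix):
    """Return (centres, radii) of the row-wise Gershgorin discs.

    Hypothetical helper for illustration: centres are the diagonal
    entries, radii are the absolute row sums without the diagonal.
    """
    m = np.asarray(matrix, dtype=complex)
    centres = np.diag(m)
    # Remove the diagonal, then sum the absolute values along each row.
    radii = np.abs(m - np.diag(centres)).sum(axis=1)
    return centres, radii


A = np.array([[4, 3, 15],
              [1, 1 + 1j, 5],
              [-8, -2, 22]], dtype=complex)

centres, radii = gershgorin_discs(A)
for i, (c, r) in enumerate(zip(centres, radii), start=1):
    print(f"C_{i}: centre = {c}, radius = {r}")
```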

We now have all the ingredients for the statement of the theorem:

Every eigenvalue \(\lambda \in \mathbb{C}\) of a square matrix \(A \in \mathbb{C}^{n \times n}\) lies in at least one of the Gershgorin discs \(C_i\) (\eqref{eq:GershgorinCircle_Disc}). The possible range of the eigenvalues is defined by the outer borders of the union of all discs

\begin{equation*} C = \bigcup_{i=1}^{n} C_i. \end{equation*}The union, in the case of the example, is \(C = C_1 \cup C_2 \cup C_3\) and based on the previous information of the discs we can now visualize the situation in the complex plane. In the following figure, the discs are shown together with their disc centres and the actual eigenvalues (which are all complex in this case)

\begin{equation*} \lambda_1 = 13.4811 - 7.48329 i, \quad \lambda_2 = 13.3749 + 7.60805 i \quad \text{and} \quad \lambda_3 = 0.14402 + 0.875241 i. \end{equation*}Indeed, all eigenvalues lie in the blue area defined by the discs. But you can also see from this example that not every disc has to contain an eigenvalue (the theorem does not state that each disc contains one). E.g. \(C_3\) on the right side does not contain any eigenvalue. This is why the theorem only makes a statement about the complete union and not about each disc independently. Additionally, you can see that one disc can be completely contained inside another disc, as is the case with \(C_2\), which lies inside \(C_1\). In this case, \(C_2\) does not give any useful information at all, since it does not expand the union \(C\) (if \(C_2\) were missing, nothing would change regarding the complete union of all discs, i.e. \(C=C_1 \cup C_2 \cup C_3 = C_1 \cup C_3\)).
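The membership of each eigenvalue in the individual discs can also be checked numerically. The following sketch assumes NumPy and uses `numpy.linalg.eigvals`; the variable names are mine.

```python
import numpy as np

A = np.array([[4, 3, 15],
              [1, 1 + 1j, 5],
              [-8, -2, 22]], dtype=complex)

centres = np.diag(A)
radii = np.abs(A - np.diag(centres)).sum(axis=1)
eigenvalues = np.linalg.eigvals(A)

# inside[k, i] is True when eigenvalue k lies in disc C_(i+1).
inside = np.abs(eigenvalues[:, None] - centres[None, :]) <= radii[None, :]

for lam, row in zip(eigenvalues, inside):
    discs = [f"C_{i + 1}" for i in np.flatnonzero(row)]
    print(f"lambda = {lam:.4f} lies in {discs}")
```

Every row of `inside` has at least one `True` entry (the theorem), while the column belonging to \(C_3\) stays empty for this matrix.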

If we want to estimate the range in which the eigenvalues of \(A\) will lie, we can use the extreme values of the union, e.g.

\begin{equation*} \left[4-18; 22+10\right]_{\operatorname{Re}} = \left[-14; 32\right]_{\operatorname{Re}} \quad \text{and} \quad \left[0 - 18; 0 + 18\right]_{\operatorname{Im}} = \left[-18; 18 \right]_{\operatorname{Im}} \end{equation*}for the real and imaginary range respectively. This defines nothing else than a rectangle containing all discs. Of course, the rectangle is an even less accurate estimate than the discs already are, but the ranges are easier to handle (e.g. to decide if a given point lies inside the valid range or not). Furthermore, if we have more information about the matrix, e.g. that it is symmetric and real-valued and therefore has only real eigenvalues, we can discard the imaginary range completely.
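As a rough sketch of how the rectangle could be computed and used for a point test, assuming NumPy (the function names `bounding_box` and `in_box` are hypothetical):

```python
import numpy as np


def bounding_box(centres, radii):
    """Axis-aligned rectangle enclosing all discs (illustrative helper)."""
    re_range = (float(np.min(centres.real - radii)),
                float(np.max(centres.real + radii)))
    im_range = (float(np.min(centres.imag - radii)),
                float(np.max(centres.imag + radii)))
    return re_range, im_range


def in_box(z, re_range, im_range):
    """Check whether the complex number z lies inside the rectangle."""
    return (re_range[0] <= z.real <= re_range[1]
            and im_range[0] <= z.imag <= im_range[1])


A = np.array([[4, 3, 15],
              [1, 1 + 1j, 5],
              [-8, -2, 22]], dtype=complex)
centres = np.diag(A)
radii = np.abs(A - np.diag(centres)).sum(axis=1)

re_range, im_range = bounding_box(centres, radii)
print("Re range:", re_range)
print("Im range:", im_range)
print(in_box(13.4811 - 7.48329j, re_range, im_range))
```

The point test only needs four comparisons, which is the practical advantage of the rectangle over the union of discs.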

In summary, with the help of the Gershgorin circle theorem, it is very easy to give an estimation of the eigenvalues of some matrix. We only need to look at the diagonal elements and corresponding sum of the rest of the row and get a first estimate of the possible range. In the next part, I want to discuss why this estimation is indeed correct.

Why it works

Let's start again with a 3-by-3 matrix called \(B\) but now I want to use arbitrary coefficients

\begin{equation*} B = \begin{pmatrix} b_{11} & b_{12} & b_{13} \\ b_{21} & b_{22} & b_{23} \\ b_{31} & b_{32} & b_{33} \end{pmatrix}. \end{equation*}Any eigenvalue \(\lambda\) with corresponding eigenvector \(\fvec{u} = (u_1,u_2,u_3)^T\) for this matrix is defined as

\begin{align*} B\fvec{u} &= \lambda \fvec{u} \\ \begin{pmatrix} b_{11} & b_{12} & b_{13} \\ b_{21} & b_{22} & b_{23} \\ b_{31} & b_{32} & b_{33} \end{pmatrix} \begin{pmatrix} u_{1} \\ u_{2} \\ u_{3} \end{pmatrix} &= \lambda \begin{pmatrix} u_{1} \\ u_{2} \\ u_{3} \end{pmatrix} \end{align*}Next, let's see what the equation for each component of \(\fvec{u}\) looks like. I select \(u_1\) and also assume that this is the component with the largest absolute value, i.e. \(\max_i{\left|u_i\right|} = \left|u_1\right|\). This is a valid assumption since one component must be the maximum and the following discussion works the same way for any other component index. For \(u_1\), this results in the following equation, which is directly rearranged a bit

\begin{align*} b_{11}u_1 + b_{12}u_2 + b_{13}u_3 &= \lambda u_1 \\ b_{12}u_2 + b_{13}u_3 &= \lambda u_1 - b_{11}u_1 \\ \left| u_1 (\lambda - b_{11}) \right| &= \left| b_{12}u_2 + b_{13}u_3 \right| \\ \end{align*}All \(u_1\) parts are placed on one side together with the diagonal element and I am only interested in the absolute value. For the right side, there is an estimation possible

\begin{equation*} \left| b_{12}u_2 + b_{13}u_3 \right| \leq \left| b_{12}u_2 \right| + \left| b_{13}u_3 \right| \leq \left| b_{12}u_1 \right| + \left| b_{13}u_1 \right| = \left| b_{12} \right| \left| u_1 \right| + \left| b_{13} \right| \left| u_1 \right| \end{equation*}Two estimates are used here: first the triangle inequality for the absolute value, and then the assumption that \(u_1\) is the largest component, i.e. \(\left|u_2\right| \leq \left|u_1\right|\) and \(\left|u_3\right| \leq \left|u_1\right|\). Last but not least, the product is split up. In short, this results in

\begin{align*} \left| u_1 \right| \left| (\lambda - b_{11}) \right| &\leq \left| b_{12} \right| \left| u_1 \right| + \left| b_{13} \right| \left| u_1 \right| \\ \left| \lambda - b_{11} \right| &\leq \left| b_{12} \right| + \left| b_{13} \right| \\ \left| \lambda - b_{11} \right| &\leq r_1 \\ \end{align*}where both sides were divided by \(\left| u_1 \right|\). This is allowed because an eigenvector is never the zero vector, so its largest component satisfies \(\left| u_1 \right| > 0\). The result states that the eigenvalue \(\lambda\) lies within a distance of \(r_1\) (cf. \eqref{eq:GershgorinCircle_Disc_RowSum}) from \(b_{11}\) (the diagonal element!). In the complex plane, this defines the previously discussed discs.
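The same steps carry over to an arbitrary \(n \times n\) matrix. As a sketch, let \(k\) denote the index of the component of \(\fvec{u}\) with the largest absolute value (the index \(k\) is notation introduced only for this remark). Row \(k\) of the eigenvalue equation then gives

\begin{align*} \sum_{j=1}^{n} b_{kj} u_j &= \lambda u_k \\ \left| \lambda - b_{kk} \right| \left| u_k \right| = \left| \sum_{j \neq k} b_{kj} u_j \right| &\leq \sum_{j \neq k} \left| b_{kj} \right| \left| u_j \right| \leq \left| u_k \right| \sum_{j \neq k} \left| b_{kj} \right| = \left| u_k \right| r_k \\ \left| \lambda - b_{kk} \right| &\leq r_k \end{align*}so the eigenvalue lies in the disc belonging to row \(k\).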

Two notes on this insight:

- The result is only valid for the maximum component of the eigenvector. Note also that we usually don't know which component of the eigenvector is the maximum (if we knew it, we probably wouldn't need to estimate the eigenvalues in the first place because we would already have them).

- In the explanation above only one eigenvector was considered. But usually, there are more (e.g. usually three in the case of matrix \(B\)). The result is therefore true for each maximum component of each eigenvector.

This also implies that not every eigenvector gives new information. It may be possible that for multiple eigenvectors the first component is the maximum. In this case, one eigenvector would have been sufficient. As an example, let's look at the eigenvectors of \(A\). The absolute values of their components are (maximum component highlighted)

\begin{equation*} \left| \fvec{u}_1 \right| = \begin{pmatrix} {\color{Aquamarine}1.31711} \\ 0.40112 \\ 1 \end{pmatrix}, \quad \left| \fvec{u}_2 \right| = \begin{pmatrix} {\color{Aquamarine}1.33013} \\ 0.431734 \\ 1 \end{pmatrix} \quad \text{and} \quad \left| \fvec{u}_3 \right| = \begin{pmatrix} 5.83598 \\ {\color{Aquamarine}12.4986} \\ 1 \end{pmatrix}. \end{equation*}As you can see, the third component is never the maximum. This is consistent with the example from above: the third disc \(C_3\) did not contain any eigenvalue.
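The link between the maximum component and the disc can be checked numerically as well. The sketch below assumes NumPy; note that `numpy.linalg.eig` normalizes the eigenvectors differently than the values shown above, which does not change the position of the maximum component.

```python
import numpy as np

A = np.array([[4, 3, 15],
              [1, 1 + 1j, 5],
              [-8, -2, 22]], dtype=complex)

centres = np.diag(A)
radii = np.abs(A - np.diag(centres)).sum(axis=1)

# Columns of `eigenvectors` are the eigenvectors, so iterate over the transpose.
eigenvalues, eigenvectors = np.linalg.eig(A)

max_rows = []
for lam, u in zip(eigenvalues, eigenvectors.T):
    k = int(np.argmax(np.abs(u)))          # row of the largest component
    distance = abs(lam - centres[k])
    max_rows.append(k)
    print(f"lambda = {lam:.4f}: max component in row {k + 1}, "
          f"|lambda - a_kk| = {distance:.4f} <= r_k = {radii[k]:.4f}")
```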

What the theorem now does is some kind of worst-case estimate. We now know that if one component of some eigenvector is the maximum, the row corresponding to this component defines a range in which the eigenvalue must lie. But since we don't know which component will be the maximum the best thing we can do is to assume that every component was the maximum in some eigenvector. In this case, we need to consider all diagonal elements and their corresponding absolute sum of the rest of the row. This is exactly what is done in the example from above. There is another nice feature which can be derived from the theorem when we have disjoint discs. This will be discussed in the next section.

The story about disjoint discs

Additional statements can be extracted from the theorem when we deal with disjoint disc areas. Consider another example with the following matrix

\begin{equation*} D= \begin{pmatrix} 1 & -2 & 3 \\ 0 & 6 & 1 \\ 3 & 0 & 9+10 i \\ \end{pmatrix}. \end{equation*}Using Gershgorin discs, this results in a situation as shown in the following figure.

This time we have one disc (centred at \(d_{33}=9+10i\)) which does not share a common area with the other discs. In other words: we have two disjoint areas. The question is: does this give us additional information? Indeed, it is possible to state that there is exactly one eigenvalue in the third disc.

Let \(A \in \mathbb{C}^{n \times n}\) be a square matrix with \(n\) Gershgorin discs (\eqref{eq:GershgorinCircle_Disc}). Then, each connected area defined by the discs contains as many eigenvalues (counted with multiplicity) as discs contributed to the area. If the set \(\tilde{C}\) contains \(k\) discs which are disjoint from the other \(n-k\) discs, then exactly \(k\) eigenvalues lie in the range defined by the union

\begin{equation*} \bigcup_{C \in \tilde{C}} C \end{equation*}of the discs in \(\tilde{C}\).
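To make the statement concrete, the following sketch groups overlapping discs of the example matrix \(D\) and counts the eigenvalues per group. It assumes NumPy; the helper `disc_groups` and the small tolerance are my own illustrative choices.

```python
import numpy as np


def disc_groups(centres, radii):
    """Group disc indices whose discs overlap directly or via a chain.

    Hypothetical helper: two discs overlap when the distance of their
    centres is at most the sum of their radii.
    """
    n = len(centres)
    group = list(range(n))  # current group label of every disc
    for i in range(n):
        for j in range(i + 1, n):
            if abs(centres[i] - centres[j]) <= radii[i] + radii[j]:
                old, new = group[j], group[i]
                group = [new if g == old else g for g in group]
    return [[i for i in range(n) if group[i] == g] for g in sorted(set(group))]


D = np.array([[1, -2, 3],
              [0, 6, 1],
              [3, 0, 9 + 10j]], dtype=complex)
centres = np.diag(D)
radii = np.abs(D - np.diag(centres)).sum(axis=1)
eigenvalues = np.linalg.eigvals(D)

groups = disc_groups(centres, radii)
counts = []
for members in groups:
    in_union = np.zeros(len(eigenvalues), dtype=bool)
    for i in members:
        # Small tolerance guards against rounding on the disc boundary.
        in_union |= np.abs(eigenvalues - centres[i]) <= radii[i] + 1e-9
    counts.append(int(in_union.sum()))
    print(f"discs {[i + 1 for i in members]} contain {counts[-1]} eigenvalue(s)")
```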

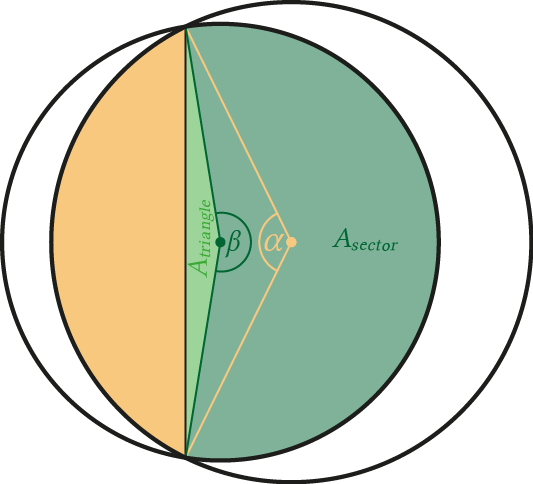

In the example, we have exactly one eigenvalue in the third disc and exactly two eigenvalues somewhere in the union of discs one and two. Why is it possible to restrict the estimate when we deal with disjoint discs? To see this, let me first remind you that the eigenvalues of any diagonal matrix are exactly the diagonal elements themselves. Next, I want to define a new function which separates the diagonal elements from the off-diagonals

\begin{align*} \tilde{D}(\alpha) &= \begin{pmatrix} 1 & 0 & 0 \\ 0 & 6 & 0 \\ 0 & 0 & 9+10 i \end{pmatrix} + \alpha \begin{pmatrix} 0 & -2 & 3 \\ 0 & 0 & 1 \\ 3 & 0 & 0 \end{pmatrix} \\ \tilde{D}(\alpha) &= D_1 + \alpha D_2 \end{align*}With \(\alpha \in [0;1]\) this smoothly adds the off-diagonal elements in \(D_2\) to the matrix \(D_1\) containing only the diagonal elements by starting from \(\tilde{D}(0) = \operatorname{diag}(D) = D_1\) and ending at \(\tilde{D}(1) = D_1 + D_2 = D\). Before we see why this step is important, let us first consider the same technique for a general 2-by-2 matrix

\begin{align*} F &= \begin{pmatrix} f_{11} & f_{12} \\ f_{21} & f_{22} \end{pmatrix} \\ \Rightarrow \tilde{F}(\alpha) &= F_1 + \alpha F_2 \end{align*}If we now want to calculate the eigenvalues for \(\tilde{F}\), we need to find the roots of the corresponding characteristic polynomial, meaning

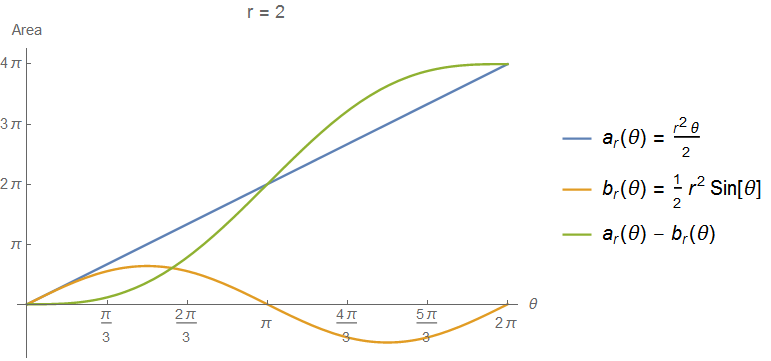

\begin{align*} \left| \tilde{F} - \lambda I \right| &= 0 \\ \left| F_1 + \alpha F_2 - \lambda I \right| &= 0 \\ \left| \begin{pmatrix} f_{11} & 0 \\ 0 & f_{22} \end{pmatrix} + \alpha \begin{pmatrix} 0 & f_{12} \\ f_{21} & 0 \end{pmatrix} - \lambda \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix} \right| &= 0. \end{align*}The solution for the first root of this polynomial and therefore the first eigenvalue is defined as

\begin{equation*} \lambda(\alpha) = \frac{1}{2} \left(-\sqrt{{\color{Aquamarine}4 \alpha ^2 f_{12} f_{21}} +f_{11}^2+f_{22}^2-2 f_{11} f_{22}}+f_{11}+f_{22}\right). \end{equation*}The thing I am driving at is the fact that the eigenvalue \(\lambda(\alpha)\) changes continuously with increasing \(\alpha\) (highlighted position): the closer \(\alpha\) gets to 1, the more of the off-diagonal entries is added. In particular, \(\lambda(\alpha)\) does not suddenly jump somewhere completely different. I chose a 2-by-2 matrix because this point is easier to see here. Finding the roots of the characteristic polynomial can quickly become much more complicated for larger matrices. But the statement that the eigenvalues change continuously stays true, even for matrices with higher dimensions.
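The closed form can be compared with numerically computed eigenvalues. The sketch below assumes NumPy and uses made-up real entries \(f_{11}=2\), \(f_{12}=1\), \(f_{21}=3\), \(f_{22}=5\), chosen so that the expression under the square root stays positive.

```python
import numpy as np

# Hypothetical example entries (not from the article).
f11, f12, f21, f22 = 2.0, 1.0, 3.0, 5.0
F1 = np.array([[f11, 0.0], [0.0, f22]])   # diagonal part
F2 = np.array([[0.0, f12], [f21, 0.0]])   # off-diagonal part

alphas = np.linspace(0.0, 1.0, 101)

# Closed-form root with the minus sign in front of the square root.
lam_closed = 0.5 * (f11 + f22 - np.sqrt(
    4 * alphas**2 * f12 * f21 + f11**2 + f22**2 - 2 * f11 * f22))

# Smaller eigenvalue of F1 + alpha * F2, computed numerically.
lam_numeric = np.array(
    [np.linalg.eigvals(F1 + a * F2).real.min() for a in alphas])

largest_step = np.max(np.abs(np.diff(lam_closed)))
print("lambda(0) =", lam_closed[0])
print("lambda(1) =", lam_closed[-1])
print("largest change between neighbouring alphas:", largest_step)
```

For these entries, \(\lambda(0)\) equals the diagonal element \(f_{11}\), and the small step size between neighbouring values of \(\alpha\) illustrates the continuous change.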

Now back to the matrix \(\tilde{D}(\alpha)\) from the example. We will now increase \(\alpha\) and see how this affects our discs. The principle is simple: just add both matrices together and apply the circle theorem to the resulting matrix. The following animation lets you perform the increase of \(\alpha\).

As you can see, the eigenvalues start at the disc centres because here only the diagonal elements remain, i.e. \(\tilde{D}(0) = D_1\). With increasing value of \(\alpha\) more and more off-diagonal elements are added letting the eigenvalues move away from the centre. But note again that this transition is smooth: no eigenvalue suddenly jumps to a completely different position. Note also that at some point the discs for the first and second eigenvalue merge together.

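Now the extended theorem becomes clear. The radii of the discs of \(\tilde{D}(\alpha)\) are \(\alpha r_i\), so they only grow with \(\alpha\), and discs which are still disjoint at \(\alpha=1\) were disjoint for every smaller \(\alpha\) as well. If the eigenvalues start at the disc centres, don't jump around and can never leave the union of the discs, then each connected union must contain as many eigenvalues as discs contributed to this union. In the example, this gives us the proof that \(\lambda_3\) must indeed lie in the disc around \(d_{33}\).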
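The argument can be retraced numerically by sampling \(\alpha\) and applying the circle theorem to \(\tilde{D}(\alpha)\) at every step. This is only a sketch assuming NumPy; the step count and the tolerance are arbitrary choices of mine.

```python
import numpy as np

D = np.array([[1, -2, 3],
              [0, 6, 1],
              [3, 0, 9 + 10j]], dtype=complex)
D1 = np.diag(np.diag(D))   # diagonal part
D2 = D - D1                # off-diagonal part

centres = np.diag(D)
radii = np.abs(D2).sum(axis=1)   # radii at alpha = 1

all_in_union = True
third_disc_counts = []
for alpha in np.linspace(0.0, 1.0, 101):
    eigenvalues = np.linalg.eigvals(D1 + alpha * D2)
    # The discs of D1 + alpha * D2 keep their centres, radii scale with alpha.
    inside = (np.abs(eigenvalues[:, None] - centres[None, :])
              <= alpha * radii[None, :] + 1e-9)
    all_in_union = all_in_union and bool(inside.any(axis=1).all())
    third_disc_counts.append(int(inside[:, 2].sum()))

print("all eigenvalues stay inside the discs:", all_in_union)
print("eigenvalues in the third disc per step:", set(third_disc_counts))
```

The third disc holds exactly one eigenvalue for every sampled \(\alpha\), which matches the statement of the extended theorem for this example.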

List of attached files:

- GershgorinCircle.nb [PDF] (Mathematica notebook for the first example about the general theorem)

- GershgorinCircle_DisjointDiscs.nb [PDF] (Mathematica notebook for the second example about the extended theorem including the animation)